Rub Rank: The Complete Guide to Fair and Transparent Evaluation

In a world that thrives on evaluations, performance measurement, and fair competition, Rub Rank has emerged as an essential tool for transparent and standardized ranking. Whether in education, corporate performance reviews, creative content evaluation, or even gaming competitions, rubric-based ranking ensures that everyone is judged against the same criteria, reducing bias and improving clarity.

This article from Trend Loop 360 explores what Rub Rank is, how it works, its applications, benefits, and challenges, and how you can create and implement an effective Rub Rank system for your needs.

What is Rub Rank?

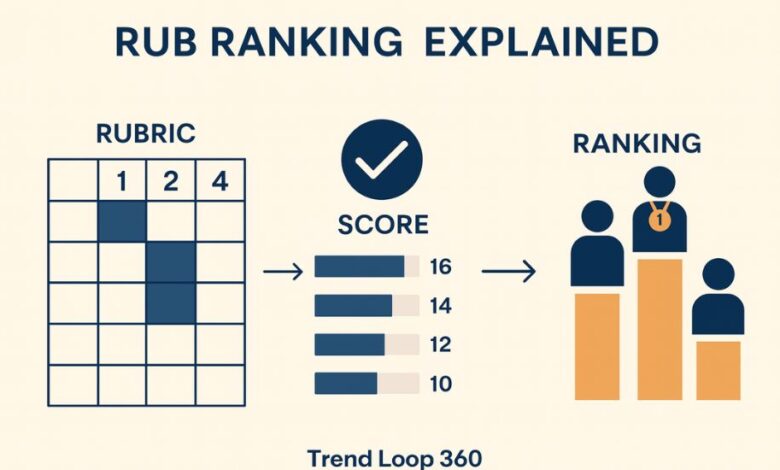

Rub Rank refers to the process of evaluating and ranking individuals, projects, performances, or products based on a rubric—a structured set of predefined criteria. Each participant is scored against the same standards, and the results are compiled to produce a ranking list.

The “Rub” in the name comes from rubric, while “Rank” reflects the final ordered result. Pairing numeric scores with clearly described criteria aims to make the assessment fair, consistent, and transparent.

Why Rub Rank is Important

Without a standardized ranking system, evaluations can become inconsistent, subjective, and even biased. Rub Rank solves this problem by:

- Setting clear expectations.
- Using uniform scoring for all.
- Providing evidence-based feedback.
- Ensuring comparability between participants.

For example, in an academic setting, if two students present projects, Rub Rank ensures they are evaluated against the same points—such as creativity, accuracy, and presentation quality—rather than relying solely on a teacher’s impression.

Core Components of Rub Rank

A successful Rub Rank system relies on several important elements:

Criteria

The foundation of Rub Rank is a clear list of criteria—aspects to be evaluated. Examples:

- Content Quality
- Creativity
- Accuracy
- Presentation Skills
- Technical Skills

Scoring Scale

A numerical scale (e.g., 1–5 or 1–10) helps rate each criterion. For example:

- 5 – Excellent
- 4 – Good
- 3 – Average
- 2 – Needs Improvement
- 1 – Poor
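
The scale above can be stored as a small lookup table so that out-of-range scores are caught when they are entered. This is a minimal Python sketch. The names `SCALE`, `validate_score`, and `describe` are hypothetical and not taken from any particular tool.

```python
# Hypothetical encoding of the 1-5 scale described above.
SCALE = {
    5: "Excellent",
    4: "Good",
    3: "Average",
    2: "Needs Improvement",
    1: "Poor",
}

def validate_score(score: int) -> int:
    """Reject any score that is not on the agreed scale."""
    if score not in SCALE:
        raise ValueError(f"score {score} is not on the scale {min(SCALE)}-{max(SCALE)}")
    return score

def describe(score: int) -> str:
    """Return the descriptive label for a numeric score."""
    return SCALE[validate_score(score)]

print(describe(4))  # Good
```

Keeping the labels next to the numbers means evaluators and participants see the same definitions.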

Weighting

Not all criteria are equally important. Weighting assigns greater importance to some categories. For example:

- Creativity: 25%
- Technical Skills: 30%
- Presentation: 20%
- Accuracy: 25%
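
Weights are easy to mistype, so it can help to check them before any scoring starts. The sketch below assumes weights are entered as percentages. It does three things:

- Rejects non-positive weights.
- Warns if the weights do not add up to 100%.
- Converts them to fractions that sum to 1.

`normalize_weights` is a hypothetical helper name.

```python
# Weights from the example above, entered as percentages.
WEIGHTS = {
    "Creativity": 25,
    "Technical Skills": 30,
    "Presentation": 20,
    "Accuracy": 25,
}

def normalize_weights(weights: dict[str, float]) -> dict[str, float]:
    """Check that weights are positive and rescale them to fractions summing to 1."""
    if any(w <= 0 for w in weights.values()):
        raise ValueError("every weight must be positive")
    total = sum(weights.values())
    if abs(total - 100) > 1e-9:
        # Not fatal: rescale anyway, but tell the rubric designer.
        print(f"warning: weights sum to {total}%, rescaling to 100%")
    return {name: w / total for name, w in weights.items()}

fractions = normalize_weights(WEIGHTS)
print(fractions["Technical Skills"])  # 0.3
```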

Aggregation

Each criterion score is multiplied by its weight, and the weighted scores are summed to give each participant a final score.
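
As a minimal Python sketch, the aggregation is a weighted sum. It assumes the weights are fractions that sum to 1 (0.25 for 25%), which keeps the final score on the same scale as the individual scores. `weighted_score` is a hypothetical name. The sample marks are Student A's from the Science Fair example later in this article.

```python
def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-criterion scores into one final score.

    `weights` are fractions that sum to 1, so the result stays on the
    same scale as the individual scores.
    """
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(scores[name] * w for name, w in weights.items())

weights = {"Creativity": 0.25, "Accuracy": 0.25, "Presentation": 0.20, "Technical Skills": 0.30}
student_a = {"Creativity": 9, "Accuracy": 8, "Presentation": 9, "Technical Skills": 8}
print(round(weighted_score(student_a, weights), 2))  # 8.45
```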

Ranking

Finally, all participants are ordered from highest to lowest score, producing the Rub Rank list.
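
The article does not say how ties should be handled. The Python sketch below assumes "standard competition ranking": participants whose scores are equal after rounding share a rank, and the next rank is skipped (1, 1, 3, ...). The function name `rank` and the participant scores are made up for illustration.

```python
def rank(final_scores: dict[str, float], decimals: int = 2) -> list[tuple[int, str, float]]:
    """Order participants from highest to lowest final score.

    Scores that are equal after rounding share a rank, and the next rank
    is skipped (standard competition ranking: 1, 1, 3, ...).
    """
    rounded = {name: round(score, decimals) for name, score in final_scores.items()}
    # sorted() is stable, so tied participants keep their input order.
    ordered = sorted(rounded.items(), key=lambda item: item[1], reverse=True)
    result: list[tuple[int, str, float]] = []
    for position, (name, score) in enumerate(ordered, start=1):
        if result and score == result[-1][2]:
            current_rank = result[-1][0]  # tie: reuse the previous rank
        else:
            current_rank = position
        result.append((current_rank, name, score))
    return result

# Made-up final scores, including a tie.
for r, name, score in rank({"Ana": 8.4, "Ben": 7.9, "Cara": 8.4, "Dev": 7.1}):
    print(r, name, score)
# 1 Ana 8.4
# 1 Cara 8.4
# 3 Ben 7.9
# 4 Dev 7.1
```

Rounding before comparing avoids splitting a tie because of tiny floating-point differences. Whether ties should share a rank at all is a policy decision for your rubric.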

How Rub Rank Works – Step-by-Step

Here’s a breakdown of the process:

- Define the Goal – Decide what you’re ranking (e.g., student essays, company performance, competition entries).
- Create the Rubric – Establish clear, measurable criteria.
- Assign Weightings – Prioritize certain criteria if necessary.
- Set the Scoring Method – Use a numeric scale or descriptive scale.
- Evaluate – Score each participant according to the rubric.
- Calculate Totals – Apply weightings and sum up scores.
- Rank Participants – Order results to create the final Rub Rank list.
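
The Python sketch below shows one way the steps might fit together in a single script. Everything in it is hypothetical and made up for illustration: the `Rubric` class, the essay criteria, the weights, and the marks. A real rubric would come from your own goal and criteria.

```python
from dataclasses import dataclass

@dataclass
class Rubric:
    """Hypothetical container for steps 2 to 4: criteria, weights, and scale."""
    weights: dict[str, float]  # criterion -> weight in percent
    scale_min: int = 1
    scale_max: int = 5

    def __post_init__(self) -> None:
        total = sum(self.weights.values())
        if abs(total - 100) > 1e-9:
            raise ValueError(f"weights must sum to 100%, got {total}%")

    def score(self, marks: dict[str, int]) -> float:
        """Steps 5 and 6: validate one participant's marks and apply the weights."""
        if marks.keys() != self.weights.keys():
            raise ValueError("marks must cover exactly the rubric's criteria")
        for criterion, mark in marks.items():
            if not self.scale_min <= mark <= self.scale_max:
                raise ValueError(f"{criterion}: {mark} is off the scale")
        return sum(mark * self.weights[c] / 100 for c, mark in marks.items())

    def rank(self, entries: dict[str, dict[str, int]]) -> list[tuple[str, float]]:
        """Step 7: order participants from highest to lowest final score."""
        totals = {name: round(self.score(marks), 2) for name, marks in entries.items()}
        return sorted(totals.items(), key=lambda item: item[1], reverse=True)

# Step 1: the goal here is ranking three essays (made-up marks on a 1-5 scale).
essay_rubric = Rubric({"Content Quality": 40, "Accuracy": 35, "Presentation": 25})
essays = {
    "Essay 1": {"Content Quality": 4, "Accuracy": 5, "Presentation": 3},
    "Essay 2": {"Content Quality": 5, "Accuracy": 3, "Presentation": 4},
    "Essay 3": {"Content Quality": 3, "Accuracy": 4, "Presentation": 5},
}
for name, total in essay_rubric.rank(essays):
    print(name, total)
# Essay 1 4.1
# Essay 2 4.05
# Essay 3 3.85
```

Validating weights when the rubric is created, and marks when each score is entered, catches mistakes before they can distort the final ranking.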

Applications of Rub Rank

Rub Rank can be applied in various fields:

Education

Teachers use Rub Rank for:

- Essay grading
- Project evaluations
- Oral presentations
- Research assignments

Corporate & HR

Companies apply Rub Rank to:

- Employee performance reviews
- Team productivity analysis
- Promotion eligibility
- Training evaluation

Creative Content Evaluation

Judges in competitions or editors in publishing use Rub Rank for:

- Artwork contests
- Music performances
- Writing competitions
- Video content reviews

Sports & Gaming

In sports and esports, Rub Rank helps:

- Assess player performance
- Rank tournament competitors
- Evaluate skill improvements

Research & Innovation

Research proposals and scientific projects are ranked based on:

- Feasibility
- Originality
- Methodology
- Potential impact

Benefits of Rub Rank

Fairness and Transparency

When the rubric is shared in advance, everyone knows the evaluation criteria and how the score is calculated.

Consistency

Different evaluators can produce similar results by following the same rubric.

Actionable Feedback

Participants understand exactly where they excel and where they need improvement.

Reduced Bias

Scoring against fixed criteria limits the influence of personal preferences on results.

Scalability

The same method works for small teams or large competitions.

Challenges in Rub Rank

Poorly Designed Rubrics

If criteria are vague or irrelevant, results lose credibility.

Overemphasis on Numbers

Quantitative scores might overlook qualitative aspects like emotional impact or creativity.

Rater Bias

Even with a rubric, subtle biases can still influence scoring.

Time-Consuming

Detailed scoring takes more time than simple subjective judgments.

Best Practices for Creating a Strong Rub Rank

- Make Criteria Clear – Use simple, understandable language.
- Be Specific – Avoid vague descriptions like “good” or “bad.”
- Balance Criteria – Don’t overload one category unless necessary.
- Pilot Test the Rubric – Try it out before official use.
- Use Multiple Evaluators – Combine scores for more reliable results.
- Review and Update – Keep your Rub Rank relevant and current.
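
One simple way to combine several evaluators' scores is to average each criterion across judges. The same pass can flag criteria where the judges disagree strongly. In the Python sketch below, `combine_judges` and the `flag_spread` threshold are hypothetical choices. The sketch also assumes every judge scores the same criteria.

```python
from statistics import mean, pstdev

def combine_judges(
    sheets: list[dict[str, float]], flag_spread: float = 1.5
) -> tuple[dict[str, float], list[str]]:
    """Average each criterion across judges and flag large disagreements.

    `flag_spread` is a hypothetical threshold on the population standard
    deviation of the judges' marks; tune it to your own scale.
    """
    combined: dict[str, float] = {}
    flagged: list[str] = []
    for criterion in sheets[0]:
        marks = [sheet[criterion] for sheet in sheets]
        combined[criterion] = mean(marks)
        if pstdev(marks) > flag_spread:
            flagged.append(criterion)  # worth a calibration discussion
    return combined, flagged

# Made-up marks from three judges on a 1-10 scale.
judges = [
    {"Creativity": 8, "Accuracy": 7},
    {"Creativity": 9, "Accuracy": 7},
    {"Creativity": 4, "Accuracy": 8},
]
combined, flagged = combine_judges(judges)
print(flagged)  # ['Creativity']
```

With only a few judges, one outlier can pull the mean noticeably. `statistics.median` is a common drop-in alternative that is less sensitive to a single extreme mark.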

Example of a Rub Rank System

Let’s say a school is holding a Science Fair and needs to evaluate projects, with each criterion scored on a 1–10 scale.

| Criteria | Weight (%) | Student A | Student B | Student C |
|---|---|---|---|---|
| Creativity | 25% | 9 | 8 | 7 |
| Accuracy | 25% | 8 | 9 | 8 |
| Presentation | 20% | 9 | 7 | 6 |
| Technical Skills | 30% | 8 | 9 | 7 |

Final Weighted Score:

- Student A – 8.45 → Rank 1
- Student B – 8.35 → Rank 2
- Student C – 7.05 → Rank 3

This is the Rub Rank output.
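
Recomputing the weighted sums from the table is a quick way to check the arithmetic. This Python sketch uses the table's marks and weights, with the weights written as fractions. For example, Student B's score is 8×0.25 + 9×0.25 + 7×0.20 + 9×0.30 = 8.35.

```python
# Weights and marks copied from the Science Fair table above.
WEIGHTS = {"Creativity": 0.25, "Accuracy": 0.25, "Presentation": 0.20, "Technical Skills": 0.30}

SCORES = {
    "Student A": {"Creativity": 9, "Accuracy": 8, "Presentation": 9, "Technical Skills": 8},
    "Student B": {"Creativity": 8, "Accuracy": 9, "Presentation": 7, "Technical Skills": 9},
    "Student C": {"Creativity": 7, "Accuracy": 8, "Presentation": 6, "Technical Skills": 7},
}

# Weighted sum per student, rounded to two decimals for display.
final = {
    student: round(sum(marks[c] * w for c, w in WEIGHTS.items()), 2)
    for student, marks in SCORES.items()
}

ordered = sorted(final.items(), key=lambda kv: kv[1], reverse=True)
for rank, (student, score) in enumerate(ordered, start=1):
    print(f"{student} - {score:.2f} -> Rank {rank}")
# Student A - 8.45 -> Rank 1
# Student B - 8.35 -> Rank 2
# Student C - 7.05 -> Rank 3
```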

Rub Rank in the Digital Era

With AI and automation, Rub Rank has become faster and more consistent. Many tools now:

- Automate score calculation
- Provide instant rankings
- Store historical performance data
- Allow multi-judge scoring

In the digital workplace, Rub Rank is now integrated into:

- Learning Management Systems (LMS)
- HR performance dashboards
- Online competition platforms

Future of Rub Rank

The future of Rub Rank is closely tied to AI-driven evaluation systems. Soon, algorithms could:

- Analyze work automatically
- Suggest improvements
- Predict ranking outcomes
- Customize feedback

However, human judgment will still play a key role, especially in areas requiring creativity, ethics, and cultural sensitivity.

Conclusion

Rub Rank is more than just a scoring tool: it’s a framework for fairness, consistency, and actionable feedback. From classrooms to corporate boardrooms, and from art contests to esports tournaments, Rub Rank creates a level playing field where performance can be evaluated consistently and transparently.

By following clear criteria, fair scoring, and transparent ranking, you can ensure that every evaluation is not just a judgment—but a pathway to growth.

As Trend Loop 360 emphasizes, in any competitive or evaluative environment, a well-designed Rub Rank system is the bridge between subjective opinion and consistent, evidence-based judgment.
